Álvaro García

Lameness in dairy cows significantly impacts their well-being and productivity, leading to pain, reduced milk yield, and early culling. Traditional diagnostic methods are labor-intensive and subjective, underscoring the need for automated solutions. While various techniques have been explored, they often require highly specialized equipment and cannot evaluate multiple lameness indicators at once. Deep learning, a powerful form of machine learning, offers a promising solution by employing algorithms that recognize patterns in large datasets such as images or videos. Convolutional Neural Networks (CNNs) are a prime example of this approach: they are trained to identify patterns in visual data, which lets them automatically detect lameness indicators in footage from standard video cameras, 3D cameras, and other imaging technologies. These systems can assess multiple cattle simultaneously, providing an objective and efficient alternative to traditional methods.

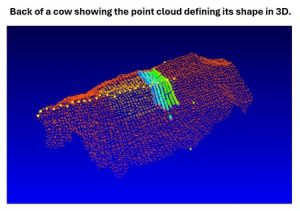

CNNs are widely used in 3D imaging to process volumetric data, for example in 3D object recognition. These deep learning algorithms consist of multiple layers, including convolutional layers that apply filters to detect features such as edges, textures, and shapes. Whereas 2D CNNs handle flat images, 3D CNNs operate on three-dimensional data, taking height, width, and depth into account. Their 3D filters, or kernels, slide over the input volume in all three directions, detecting the patterns and features needed for tasks such as pose estimation and lameness detection in cows. By identifying curvature and structural details in 3D data, such as a point cloud of the cow’s body (typically converted to a voxel grid before a 3D CNN processes it), these networks capture spatial relationships and depth information. This extension of 2D CNN principles to three dimensions enables detailed feature extraction and advanced classification, such as detecting lameness from subtle differences in a cow’s posture and movement.
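To make the idea of a kernel sliding through a volume concrete, here is a minimal, dependency-free Python sketch of a single 3D convolution (stride 1, no padding). It is a toy illustration, not the networks used in the research discussed here: real 3D CNNs learn many kernels from data, add biases and nonlinear activations, and run on optimized deep learning libraries. The function name `conv3d_valid`, the toy volume, and the hand-set kernel are all hypothetical.

```python
from typing import List

# A volume or kernel indexed as [depth][height][width]
Volume = List[List[List[float]]]


def conv3d_valid(volume: Volume, kernel: Volume) -> Volume:
    """Slide a 3D kernel over a 3D volume (stride 1, no padding).

    Like CNN layers, this computes cross-correlation: the kernel is not flipped.
    The output shrinks by (kernel size - 1) along each axis.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out: Volume = []
    for z in range(depth - kd + 1):
        plane = []
        for y in range(height - kh + 1):
            row = []
            for x in range(width - kw + 1):
                # Weighted sum of the voxels currently under the kernel
                total = 0.0
                for dz in range(kd):
                    for dy in range(kh):
                        for dx in range(kw):
                            total += volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                row.append(total)
            plane.append(row)
        out.append(plane)
    return out


# Toy 4x4x4 volume: voxels at depth index 2 and beyond are "occupied" (1.0)
vol = [[[1.0 if z >= 2 else 0.0 for _ in range(4)] for _ in range(4)] for z in range(4)]

# Hand-set 2x1x1 kernel that responds to a change along the depth axis
depth_edge = [[[-1.0]], [[1.0]]]

feature_map = conv3d_valid(vol, depth_edge)
print([plane[0][0] for plane in feature_map])  # [0.0, 1.0, 0.0]
```

The feature map is nonzero only where occupancy changes along depth, i.e. at the surface of the toy object. In a trained 3D CNN the kernel weights are learned rather than set by hand, so the network discovers which local 3D structures matter for the task.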

Current research

Barney et al. (2023) conducted a study in the UK to develop a fully automated system that could detect lameness in multiple cows at once. Three independent mobility scorers assessed a total of 250 cows for lameness. A deep learning model called Mask-RCNN estimated each cow’s pose by locating anatomical key points, and the researchers then analyzed how these postures correlated with the cows’ lameness scores. They found significant associations between lameness and the positions of the back key points, head position, and neck angle. A second algorithm, CatBoost, was used to assess which features were most important for lameness detection and to distinguish between the mobility categories. In summary, the Mask-RCNN pose estimation model identified the anatomical points essential for lameness detection, while CatBoost, a gradient-boosting machine learning algorithm, used the posture and gait features derived from those points and tracked for each cow over time to classify lameness. Together, the algorithms accurately classified cows into four lameness categories (scores 0–3), going beyond previous studies, which typically offered only a binary (lame vs. not lame) classification. Back posture emerged as a promising feature for automated cattle mobility scoring, with accuracy above 90%.

To investigate back posture, the researchers used five key points (tail setting, lower neck/scapula, withers, center of the back, and hook bone) to estimate the area enclosed between the cow’s back and a baseline. A baseline was drawn connecting the tail setting to the lower neck/scapula, and the key points were joined by top lines following the back. Perpendicular lines were then dropped from the intermediate points (withers, center of the back, and hook bone) to the baseline, and lines parallel to the baseline were drawn from the second and fourth key points (withers and hook bone) to the central perpendicular line. The back area was calculated by summing the areas of the resulting four triangular and two rectangular sections, giving a detailed estimate of the back’s curvature.
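The construction can be sketched in Python as follows. This is a minimal sketch under our reading of the description, not the study’s code: it assumes the key points are ordered along the back from the lower neck/scapula to the tail setting, that all of them lie on the same side of the baseline, and that each rectangle spans the lower of its two neighboring heights with a triangle filling the rest. Under those assumptions the four triangles and two rectangles tile the polygon enclosed by the back line and the baseline, so the result matches the shoelace formula, which is included as a cross-check. The function names and coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def baseline_coords(p: Point, start: Point, end: Point) -> Tuple[float, float]:
    """Position of p along the baseline start->end and its perpendicular distance to it."""
    ux, uy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(ux, uy)
    ux, uy = ux / length, uy / length            # unit vector along the baseline
    dx, dy = p[0] - start[0], p[1] - start[1]
    along = dx * ux + dy * uy                    # foot of the perpendicular
    height = abs(dx * uy - dy * ux)              # length of the perpendicular
    return along, height


def back_area(neck: Point, withers: Point, center: Point, hook: Point, tail: Point) -> float:
    """Sum of four triangles and two rectangles between the back line and the baseline."""
    (x_w, h_w), (x_c, h_c), (x_h, h_h) = (
        baseline_coords(p, neck, tail) for p in (withers, center, hook)
    )
    base_len = math.dist(neck, tail)
    # End triangles: lower neck -> withers and hook bone -> tail setting
    area = 0.5 * x_w * h_w + 0.5 * (base_len - x_h) * h_h
    # Middle sections: a rectangle up to the lower height plus a triangle above it
    for (x0, h0), (x1, h1) in (((x_w, h_w), (x_c, h_c)), ((x_c, h_c), (x_h, h_h))):
        width = x1 - x0
        area += width * min(h0, h1) + 0.5 * width * abs(h1 - h0)
    return area


def shoelace(points: Sequence[Point]) -> float:
    """Area of a simple polygon from its ordered vertices (used as a cross-check)."""
    n = len(points)
    s = sum(points[i][0] * points[(i + 1) % n][1] - points[(i + 1) % n][0] * points[i][1]
            for i in range(n))
    return abs(s) / 2


# Hypothetical key-point coordinates, ordered from lower neck/scapula to tail setting
neck, withers, center, hook, tail = (0, 0), (2, 3), (5, 4), (8, 3), (10, 0)
print(back_area(neck, withers, center, hook, tail))   # 27.0
print(shoelace([neck, withers, center, hook, tail]))  # 27.0
```

Working in baseline coordinates (distance along the baseline and perpendicular height) makes the result independent of how the cow is oriented in the frame; `math.dist` requires Python 3.8 or later.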

Head position analysis was also crucial. It involved extending the line of best fit from the back posture analysis and computing the squared distance from the nose and head key points to this regression line, together with the side of the line on which each point fell, to determine whether the head was carried above or below the line of the back. The neck angle relative to the back was also examined: the researchers calculated the gradient of the line from the front shoulders to the lower neck/scapula and the gradient of the line connecting the lower neck to the head, then computed the angle between these two lines to assess how the neck was aligned with the back.
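The two head measurements can be sketched in Python as shown here. This is a minimal illustration under stated assumptions, not the study’s implementation: it assumes a y-up coordinate system (image coordinates usually have y pointing down, which swaps "above" and "below"), fits the back line by ordinary least squares, and interprets "squared distance" as the squared perpendicular distance, using the sign of the residual to decide whether a point is above or below the line. For the neck angle it applies `math.atan2` to each segment’s direction, which gives the same angle as the gradient formula tan θ = |(m₂ − m₁)/(1 + m₁m₂)| but still works when a segment is vertical. All function names and coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_line(points: Sequence[Point]) -> Tuple[float, float]:
    """Ordinary least-squares line y = m*x + b through the back key points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    m = sxy / sxx
    return m, mean_y - m * mean_x


def head_offset(point: Point, m: float, b: float) -> Tuple[float, bool]:
    """Squared perpendicular distance to y = m*x + b, and whether the point lies above it (y up)."""
    residual = point[1] - (m * point[0] + b)
    return residual ** 2 / (1 + m ** 2), residual > 0


def line_angle(p1: Point, p2: Point, q1: Point, q2: Point) -> float:
    """Angle in degrees (0 to 90) between line p1-p2 and line q1-q2."""
    a = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    c = math.atan2(q2[1] - q1[1], q2[0] - q1[0])
    diff = abs(math.degrees(a - c)) % 180  # lines have no direction, so fold to [0, 180)
    return min(diff, 180 - diff)


# Hypothetical key points (y up): a level back with the head carried low
back = [(0, 10), (2, 10), (4, 10), (6, 10), (8, 10)]
m, b = fit_line(back)
print(head_offset((-3, 6), m, b))  # (16.0, False): 4 units below the back line

shoulder, lower_neck, head = (2, 10), (0, 9), (-3, 6)
print(round(line_angle(shoulder, lower_neck, lower_neck, head), 2))  # 18.43
```

With gradients m₁ = 0.5 (shoulder to lower neck) and m₂ = 1 (lower neck to head), the gradient formula gives tan θ = 0.5/1.5 = 1/3, or about 18.43°, matching the `atan2` result.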

This study marks a significant advancement in on-farm lameness detection in dairy cows by developing a fully automated, high-accuracy system using deep learning and machine learning techniques. It enables early detection and assessment of lameness severity, allowing for prompt intervention and treatment. Early detection improves animal well-being, reduces economic losses, and boosts productivity. Additionally, the non-invasive nature of this technology ensures minimal disruption to farm operations, making it practical for widespread adoption.

Farm application of this research

Recent advances have translated research findings such as these into 3D camera systems that monitor cows’ locomotion scores. These cameras use algorithms to estimate the well-known 5-point locomotion score used worldwide by veterinarians and dairy farmers. By linking cow IDs from RFID readers with images of each cow, the systems estimate changes in locomotion score at every milking throughout lactation. The technology works like having a locomotion scoring expert assess your cows several times a day, with the results automatically transferred to your computer for analysis alongside changes in milk production. The cameras also monitor body condition, providing insight into how locomotion scores affect body condition and milk production.

Let’s look at cow 570, for example, a primiparous cow at 133 days in milk. Her record shows that something happened at calving, causing a nearly 1-point drop in her locomotion score almost immediately. Although her score briefly recovered a few times, it consistently remained one point below her baseline. She never peaked as expected and produced as much as 40 pounds less than her expected production. Her body condition score (BCS) has been erratic but always above the ideal for this stage of lactation. This suggests that while her locomotion problems have reduced her milk production, they have not been severe enough to significantly reduce her feed intake and, consequently, her body condition.

Because Cow 570 is producing less than her predicted yield, her economic return is directly reduced. To improve her profitability, the underlying locomotion problem should be addressed promptly, for example with a thorough veterinary examination and corrective hoof trimming. If possible, her living conditions should also be modified to reduce stress and the risk of injury. A more targeted feeding strategy that supports her recovery without compromising her BCS could further help optimize her overall performance. Continuous monitoring of her locomotion and BCS will be essential to confirm that any adjustments lead to sustained improvements in both her health and productivity.

The study discussed in this article introduces an automated system for detecting lameness in dairy cows using deep learning and machine learning. By enabling early detection and classification of lameness, it represents a significant advance in farm management that can enhance animal well-being, reduce economic losses, and increase productivity. Integrated into 3D cameras, this kind of technology monitors locomotion scores on the widely used 5-point scale, using RFID cow IDs and images to track changes throughout lactation. It acts like having a locomotion scoring expert assess every cow at each milking, with results automatically transferred and analyzed alongside milk production data.

© 2025 Dellait Knowledge Center. All Rights Reserved.